Purpose:

This article is to tell you how to configure a Single Node Hadoop Setup on MacOS to run some basic MapReduce job from scratch.

Step1:

Download a stable Hadoop released from Apache Server. I use hadoop-1.0.3.

Use the following command to extract the files into your favorite directory.

make sure your working directory is under ~/SOME/hadoop-1.0.3

Step2:

Add the following line into the file ./conf/hadoop-env.sh

Step1:

Download a stable Hadoop released from Apache Server. I use hadoop-1.0.3.

Use the following command to extract the files into your favorite directory.

tar xzvf hadoop-1.0.3.tar.gz

make sure your working directory is under ~/SOME/hadoop-1.0.3

Step2:

Add the following line into the file ./conf/hadoop-env.sh

# set JAVA_HOME in this file export JAVA_HOME=/System/Library/Frameworks/JavaVM.framework/Versions/CurrentJDK/Home #suppress wrong output info due to JRE SCDynamic export HADOOP_OPTS="-Djava.security.krb5.realm= -Djava.security.krb5.kdc="

Step3:

Modify the following files:

conf/core-site.xml

note: the key {hadoop.tmp.dir} is used to set the location for meta data file of hdfs. conf/hdfs-site.xmlhadoop.tmp.dir /Users/YOURNAME/tmp1 A base for other temporary directories. fs.default.name hdfs://localhost:9000

conf/mapred-site.xmldfs.replication 1

mapred.job.tracker localhost:9001

Step 4:

Enable Remote Login for your MacOS. Go to SystemPreference->Sharing check the Remote Login Issue the following command in your terminal:

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa $ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys $ ssh localhostIf it works well without any prompt after your issuing last command, it means OK.

Step5:

format a new distribute-filesystem and start the hadoop framework:

$ bin/hadoop namenode -format $ bin/start-all.shAt this time, you should find several newly-added directory in the path /Users/YOURNAME/tmp1 which you set the value for the key {hadoop.tmp.dir}.

Now, open your browser and visit the following the address

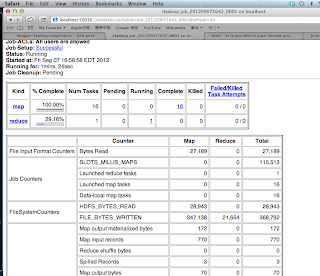

NameNode - http://localhost:50070/

JobTracker - http://localhost:50030/

You should see a page like this:

Note: there should be 1 live node existing if everything goes well. Otherwise, you must restart the hadoop daemon. However, sometime live node is alway 0 which means you can not execute any mapreduce job. From my experience, a effective way is by deleting all directories generated by hadoop in the path {hadoop.tmp.dir}. Then run the following commands again

$ bin/hadoop namenode -format $ bin/start-all.sh

Step6:

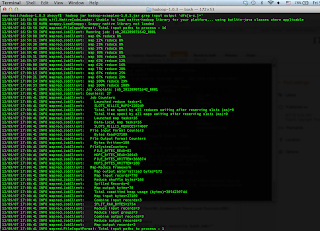

Test your mapreduce job execution by issuing the following commands:

$ bin/hadoop fs -put conf input $ bin/hadoop jar hadoop-examples-*.jar grep input output 'dfs[a-z.]+'You can monitor the job process through your terminal or web browser by visiting http://localhost:50030/